skada.JDOTRegressor

- skada.JDOTRegressor(base_estimator=None, alpha=0.5, n_iter_max=100, tol=1e-05, verbose=False, **kwargs)

Joint Distribution Optimal Transport (JDOT) regressor proposed in [10].
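For reference, [10] formulates JDOT regression as a joint minimization over a prediction function f and a coupling γ between the n source samples (x_i^s, y_i^s) and the m target samples x_j^t, roughly as below. Here d is a distance between features, L is the label loss, Π(μ_s, μ_t) is the set of couplings whose marginals are the source and target sample weights, and λΩ(f) is an optional regularizer on f. This page does not state exactly how the `alpha` parameter maps onto the weight α (for instance whether the label term is also weighted), so treat that correspondence as an assumption.

$$
\min_{f,\ \gamma \in \Pi(\mu_s, \mu_t)} \; \sum_{i=1}^{n} \sum_{j=1}^{m} \gamma_{ij} \Bigl[\alpha\, d\bigl(x_i^s, x_j^t\bigr) + \mathcal{L}\bigl(y_i^s, f(x_j^t)\bigr)\Bigr] + \lambda\, \Omega(f)
$$

With f fixed, minimizing over γ is an OT problem; with γ fixed, minimizing over f is a weighted regression problem. Alternating the two gives the iterations bounded by `n_iter_max` and `tol`.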

Warning

This estimator assumes that the base estimator optimizes the quadratic loss, i.e. an L2 loss (e.g. LinearRegression(), Ridge() or even MLPRegressor()). Any estimator providing the necessary prediction functions can be used, but the convergence of the fixed-point iterations is then not guaranteed and the behavior can be unpredictable.
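One way to see why the quadratic loss matters: for a fixed transport plan γ between n source samples (x_i^s, y_i^s) and m target samples x_j^t, the squared-loss label term reduces to a weighted least-squares fit on barycentric target labels, which is exactly the problem an L2 base estimator solves. The symbols γ, w_j and ŷ_j are introduced here for illustration and assume every target point receives positive mass (w_j > 0).

$$
\sum_{i=1}^{n}\sum_{j=1}^{m} \gamma_{ij}\,\bigl(y_i^s - f(x_j^t)\bigr)^2
= \sum_{j=1}^{m} w_j \bigl(\hat{y}_j - f(x_j^t)\bigr)^2 + C,
\qquad
w_j = \sum_{i=1}^{n} \gamma_{ij},
\quad
\hat{y}_j = \frac{1}{w_j}\sum_{i=1}^{n} \gamma_{ij}\, y_i^s,
$$

where $C = \sum_{i,j} \gamma_{ij} (y_i^s)^2 - \sum_{j} w_j \hat{y}_j^2$ does not depend on f. With a different loss (e.g. absolute error, whose minimizer is a weighted median rather than a weighted mean), fitting the base estimator to ŷ_j no longer minimizes the left-hand side, so the alternating updates need not converge.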

- Parameters:

- base_estimator : object

The base estimator used for the regression task. To match the theoretical JDOT regression problem, this estimator should solve a least-squares regression problem (regularized or not); other approaches can be used, at the risk that the fixed-point iterations do not converge. Defaults to LinearRegression() from scikit-learn.

- alpha : float, default=0.5

The trade-off parameter between the feature loss and the label loss in the OT cost.

- n_iter_max : int, default=100

Maximum number of iterations of the JDOT alternating optimization.

- tol : float > 0, default=1e-05

Tolerance on the variation of the losses (OT and MSE) used to stop the iterations.

- verbose : bool, default=False

If True, print the losses at each iteration.

- Attributes:

- estimator_ : object

The fitted estimator.

- lst_loss_ot_ : list

The list of OT losses at each iteration.

- lst_loss_tgt_labels_ : list

The list of target label losses at each iteration.

- sol_ : object

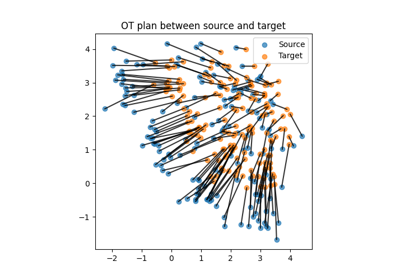

The solution of the OT problem.
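The alternating optimization controlled by these parameters can be sketched in plain NumPy/SciPy. This is not skada's implementation: `jdot_regression_sketch`, `fit_ls` and `predict_ls` are hypothetical helpers, ordinary least squares stands in for `LinearRegression()`, the cost is assumed to be the convex combination `alpha * feature_cost + (1 - alpha) * label_cost`, and the exact OT problem is solved with `scipy.optimize.linear_sum_assignment`, which is only valid here because source and target have the same number of samples with uniform weights. The returned lists mirror the roles of `lst_loss_ot_` and `lst_loss_tgt_labels_`, and `plan` loosely plays the role of `sol_`.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def fit_ls(X, y):
    """Hypothetical helper: ordinary least squares with an intercept."""
    A = np.hstack([X, np.ones((X.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_ls(coef, X):
    """Hypothetical helper: affine prediction X @ w + b."""
    return np.hstack([X, np.ones((X.shape[0], 1))]) @ coef


def jdot_regression_sketch(Xs, ys, Xt, alpha=0.5, n_iter_max=100, tol=1e-5, verbose=False):
    """Alternating JDOT updates; a sketch, not skada's implementation.

    Assumes equal source/target sizes with uniform weights, so the exact OT
    plan is a permutation scaled by 1/n (found by linear_sum_assignment).
    """
    n = len(Xs)
    if len(Xt) != n or n_iter_max < 1:
        raise ValueError("sketch needs len(Xs) == len(Xt) and n_iter_max >= 1")
    # Feature cost: squared Euclidean distance between source and target points.
    M_x = ((Xs[:, None, :] - Xt[None, :, :]) ** 2).sum(axis=-1)
    coef = fit_ls(Xs, ys)  # start from a model fitted on the labelled source
    lst_loss_ot, lst_loss_tgt_labels = [], []
    for it in range(n_iter_max):
        # Label cost: squared error between source labels and target predictions.
        M_y = (ys[:, None] - predict_ls(coef, Xt)[None, :]) ** 2
        # Assumed convex combination of the feature and label costs.
        M = alpha * M_x + (1 - alpha) * M_y
        # OT step: exact plan for uniform weights and equal sizes.
        rows, cols = linear_sum_assignment(M)
        plan = np.zeros((n, n))
        plan[rows, cols] = 1.0 / n
        loss_ot = float((plan * M).sum())
        # Regression step: fit on barycentric labels (plan-weighted source labels).
        yt_hat = (plan.T @ ys) / plan.sum(axis=0)
        coef = fit_ls(Xt, yt_hat)
        loss_tgt = float(np.mean((predict_ls(coef, Xt) - yt_hat) ** 2))
        # Stop when both losses vary by less than tol between iterations.
        converged = (
            it > 0
            and abs(loss_ot - lst_loss_ot[-1]) < tol
            and abs(loss_tgt - lst_loss_tgt_labels[-1]) < tol
        )
        lst_loss_ot.append(loss_ot)
        lst_loss_tgt_labels.append(loss_tgt)
        if verbose:
            print(f"iter {it}: OT loss={loss_ot:.6f}, target MSE={loss_tgt:.6f}")
        if converged:
            break
    return coef, lst_loss_ot, lst_loss_tgt_labels, plan


# Usage on synthetic data where the target features are shifted.
rng = np.random.default_rng(0)
Xs = rng.normal(size=(40, 2))
ys = Xs @ np.array([2.0, -1.0]) + 0.1 * rng.normal(size=40)
Xt = rng.normal(loc=0.5, size=(40, 2))
coef, lst_loss_ot, lst_loss_tgt_labels, plan = jdot_regression_sketch(Xs, ys, Xt, verbose=True)
```

Because both steps exactly minimize the same objective in this sketch (the OT step over the plan, the least-squares step over the model), the recorded OT loss should not increase from one iteration to the next. With a base estimator that does not minimize a squared loss, the regression step no longer minimizes that objective and this guarantee is lost.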

References

- [10] N. Courty, R. Flamary, A. Habrard, A. Rakotomamonjy, Joint Distribution Optimal Transportation for Domain Adaptation, Neural Information Processing Systems (NIPS), 2017.